focused on a government contest to build a humanoid robot capable of acting as a first responder in dangerous situations. The challenge of dealing with the Fukushima nuclear disaster has focused the military on what talents are needed in the next generation of robots, where the machines need to perform tasks as well as send back images of a situation.

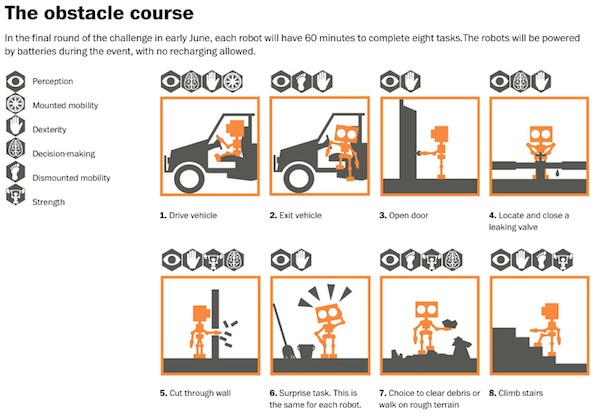

The competition includes a variety of activities on the obstacle course, from driving (!) to opening doors and closing valves. This is advanced stuff (assuming they can do it) and shows how fast things are moving in robo-tech.

Certainly we all want robots that can deal with Fukushima-type disasters, where radiation threatens the health of humans trying to deal with the mess for even a short period of time. But you can see where this is going, namely to the eventual elimination of some police and firefighter jobs. Not all, of course, but those jobs (plus pensions) are very expensive for local governments. (The average San Francisco police pension as of 2011 was $95,016.) Police deserve a generous retirement for what they put up with, but governments tend to look at the dollar cost of such services and how to cut it.

The robots being built for the competition are not in the police or enforcement category, but any bot that can drive a car and open a door can also be programmed to fire a gun. Military robots will eventually replace some soldiers as well, continuing the reduction of the employment and opportunity universe.

Military pushes for emergency robots as skeptics worry about lethal uses, Washington Post, May 16, 2015

It’s 6-foot-2, with laser eyes and vise-grip hands. It can walk over a mess of jagged cinder blocks, cut a hole in a wall, even drive a car. And soon, Leo, Lockheed Martin’s humanoid robot, will move from the development lab to a boot camp for robots, where a platoon’s worth of the semiautonomous mechanical species will be tested to see if they can be all they can be.

Next month, the Pentagon is hosting a $3.5 million, international competition that will pit robot against robot in an obstacle course designed to test their physical prowess, agility, and even their awareness and cognition.

Galvanized by the Fukushima Daiichi nuclear power disaster in 2011, the Defense Advanced Research Projects Agency — the Pentagon’s band of mad scientists that have developed the “artificial spleen,” bullets that can change course midair and the Internet — has invested nearly $100 million into developing robots that could head into disaster zones off limits to humans.

“We don’t know what the next disaster will be, but we know we have to develop the technology to help us to address these kinds of disaster,” Gill Pratt, DARPA’s program manager, said in a recent call with reporters.

The competition comes at a time when weapons technology is advancing quickly and, with lasers that can shoot small planes out of the sky and drones that can land on aircraft carriers, piercing the realm of science fiction.

But some fear that the technological advancements in weapons systems are outpacing the policy that should guide their use. At a meeting last month, the U.N. Office at Geneva sponsored a multi-nation discussion on the development of the “Lethal Autonomous Weapons Systems,” the legal questions they raise and the implications for human rights.

While those details are being hashed out, Christof Heyns, the U.N.’s special rapporteur, called in 2013 for a ban on the development of what he called “lethal autonomous robots,” saying that “in addition to being physically removed from the kinetic action, humans would also become more detached from decisions to kill — and their execution.”

Mary Wareham, the global coordinator for the Campaign to Stop Killer Robots, a consortium of human rights groups, said the international community needs to ensure that when it comes to decisions of life and death on the battlefield, the humans are still in charge.

“We want to talk to the governments about how [the robots] function and understand the human control of the targeting and attack decisions,” she said. “We want assurances that a human is in the loop.”

Organizers of the DARPA Robotics Challenge are quick to point out that the robots are designed for humanitarian purposes, not war. The challenge course represents a disaster zone, not a battlefield. And although the robots may look like the Terminator and move with the rigidity of Frankenstein’s monster, they are harmless noncombatants, with the general dexterity of a teetering 1-year-old. During the challenge, DARPA officials expect a few of the robots to end up on their keisters looking more helpless than threatening.

Their cognitive ability is not very advanced, either. Even though they are loaded with thousands of lines of code and able to communicate wirelessly with their human overseers, the robots are limited to simple tasks, such as opening doors or walking up stairs.

But although the aim of the contest is to help develop robots to use in humanitarian missions, such as sifting through the rubble after the earthquake in Nepal, officials acknowledge that as the technology advances, they could, one day, be used for all sorts of tasks, from helping the elderly, to manufacturing, and, yes, even as soldiers.

“As with any technology, we cannot control what it is going to be used for,” Pratt said. “We do believe it is important to have those discussions as to what they’re going to be used for, and it’s really up to society to decide that. But to not develop the technology is to deny yourself the capability to respond effectively, in this case to disasters, and we think it’s very important to do that.”

A contest is born

After the 2011 earthquake and tsunami caused the meltdown at the Fukushima Daiichi nuclear power plant, workers inside the plant tried to vent the hydrogen that was dangerously building up in the reactors. But with workers’ exposure to radiation building, they were forced to evacuate.

The Pentagon had helped dispatch robots to the scene to address this situation: to go where it was too dangerous for humans and turn the valves that could have dissipated the hydrogen, potentially preventing the explosions. But officials quickly realized that it would be difficult to redirect the robots from what had been their primary mission, defusing roadside bombs, to something like turning valves in a remote location, Pratt said.

Further, it took weeks to train the personnel on how to use them for those new purposes. And by the time the robots were deployed to the scene, it was too late for them to do anything but survey the damage.

That served as a wake-up call at the Pentagon and, particularly, at DARPA, where officials felt as if robots should be able to help more in such situations.

And so two years after the nuclear meltdown, DARPA held the first stage in its Robotics Challenge, a virtual competition in which teams moved their robots through a computer-animated world. Then, a few months later, it held another contest, with real obstacles and tasks.

But the finals, to be held June 5-6 in Pomona, Calif., will be far more difficult — with times to perform the tasks cut drastically and with communications between the teams and their robots at times limited — a testament to how far the technology has advanced since the last competition.

With their “human supervisors” sequestered in a remote location, unable to see the course, the robots will first drive an all-terrain vehicle to the course while avoiding barriers set in their path.

Then they’ll come to a door, which they’ll have to open and walk through. Inside will be tasks such as turning a valve, cutting a hole in a wall, walking over uneven terrain and climbing stairs, all designed to simulate a disaster zone. There will be eight tasks in all, including one that’s a surprise, and the teams will have 60 minutes to complete them.

Most of the entrants resemble humans, with two arms and two legs, and could be cast in Hollywood’s next futuristic blockbuster. But there is also “CHIMP,” developed by Carnegie Mellon University, a squat, long-armed machine that uses wheeled treads to get around. Another, named “RoboSimian,” is a four-legged “ape-like” creature developed by NASA that, depending on how its limbs are situated, is also strikingly arachnid-like.

In all, there are 25 teams from all over the world, from companies, academic institutions and government agencies, all vying for the $2 million first prize. (Second is $1 million; third is $500,000.)

Lockheed Martin, the world’s largest defense contractor, has pulled together a team of engineers and scientists to develop Leo, an Atlas robot made by Boston Dynamics that several of the teams are using. Although the robotic skeletons are similar, the technological DNA inside the robots is vastly different.

Leo sees with a combination of lidar, a radar-like system that uses lasers to detect the world around it, and cameras that give the team a view of what’s immediately in front of the robot. It also has a limited ability to make sense of its environment, for example when opening a door or navigating stairs.

“Like, ‘Oh, I see a valve. I know that valves can be turned,’” said Todd Danko, the principal investigator for Lockheed Martin’s team TROOPER. “And a human operator can say, ‘Turn the valve.’ And the robot knows what turning means. And it knows that turning is associated with valves. And it knows what valves are. And so it can go ahead and just do that.”

At one point during the testing, though, Leo lost its balance and started to fall face first until its harness caught it and held it up. It hung there, helpless, slumped like a drunk using the wall as a crutch.

Thorny questions

Vegas oddsmakers might make Running Man the favorite to win next month. It finished second in the 2013 competition, and since then, developers at the Institute of Human and Machine Cognition in Florida have been formulating a “whole body control algorithm” to help it walk and balance better and find “the sweet spot for human and machine teamwork.”

But they also received a boost when Google announced last year that its Schaft robot, which won the 2013 competition, was pulling out of the contest. Google declined to comment on why it dropped out. But many in the robotics community immediately suspected that the decision was tied to a reluctance to work with the Pentagon.

Whether or not that is true, DARPA is keenly aware that the fast-moving technology raises “difficult societal, ethical and legal questions,” DARPA spokesman Rick Weiss said.

That’s why it has sponsored another competition, this one for high school students who were asked to make a video discussing the role robots should play in society.

The contest was specifically geared toward young people because, he said, they will likely come of age as the technology matures and become “the first generation that will probably grow up in and live in a society that is so fully infused with robotic technologies.”